Detect #17: LLM-induced outage; get more out of OTel with CREs; Google & Cloudflare incidents ...

An adventure through the vibrant world of problem detection, where every post is a mix of expert insights, community wisdom, and tips, designed to turbocharge your expertise.

Join the newsletter:

Popular articles

Welcome to the August edition of Detect — a monthly roundup of outages, monitoring tips, debugging stories, and more from the reliability community.

We're brought to you by Prequel, the creators of the new 100% open-source problem detection projects: CRE and preq. Check them out and leave a ⭐.

Here's a digest of what's new in the world of problem detection...

Alerting Tips & Tricks 💡

- Marry OpenTelemetry with community CREs to turn noisy telemetry into proactive detections (prequel)

- Three alerting rules Hugging Face relies on to avoid incidents (huggingface)

- Proactively Detect and Resolve Istio Issues - Brand new CREs proactively catch common Istio ambient mode issues before (prequel)

Notable Incidents 🔥

- Webflow disruption (28 Jul) — A high-volume attack overwhelmed Webflow’s primary Postgres cluster, freezing Designer, Dashboard, and Publish for ~72 h; engineers re-sharded to faster single-socket nodes and blocked the attacker (webflow) | (cdn.prod.website-files)

- Google Workspace errors (18 Jul) — An accidental disconnect of a top-of-rack switch in us-central1 partitioned Gmail, Drive, Docs, and Meet control-plane traffic, returning 500/502s for 46 mins before traffic was rerouted and hardware replaced (google)

- Cloudflare 1.1.1.1 DNS outage (14 Jul) — A misscoped internal deployment withdrew anycast prefixes for the public resolver, making 1.1.1.1 unreachable worldwide for 62 min; change controls and testbeds were tightened afterward (blog.cloudflare)

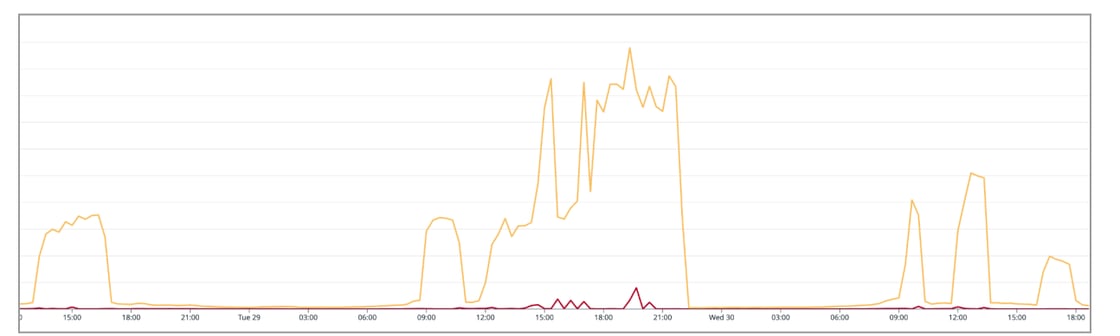

- "Our first outage from LLM-written code" (15 Jul) — An LLM-generated refactor changed a break to continue, causing an infinite loop that spiked CPU and stalled sync writes; reverting the commit restored stability and prompted new AI-code safeguards (sketch.dev)

Hands-On Debugging 🔎

- Pinterest’s “one-in-a-million” search failure — migrating to Kubernetes exposed a rare fault only at multi-terabyte index scale; bisection + rr replay helped solve the mystery. (pinterest)

Fresh Ideas 🤔

- Cloudflare and the infinite sadness of migrations — why “big-bang” cut-overs still bite in 2025 (surfingcomplexity)

- Why reliability is hard at scale — Pragmatic Engineer on compound complexity and talent pipelines (pragmaticengineer)

- The four-bullet incident snapshot — a concise template that speeds exec briefings (joshbeckman)

- “Best practices” aren’t always best — context before canon when copying reliability playbooks (thefridaydeploy)

Architecting for Reliabilty 🏗️

- Jet-lag the traffic — Nebula TV eases launch spikes by gradually shifting viewers across PoPs (nebula.tv)

- Monzo stand-in platform — A lean backup bank (18 services in GCP) keeps payments flowing if AWS core fails (leaddev)

Tools 🛠️

- dlg — a minimal API for printf-style debugging (github).

- Zig profilers roundup — survey of perf, hotspot, and new JIT-assisted profilers for Zig projects (bugsiki)

Whether you're on call this week, looking to improve system reliability, or simply keeping up with the latest tips & tricks, we’re happy to be a part of your day.

Follow us on X: @prequel_dev @detect_sh

Did someone forward you this email? Join our mailing list so you'll be the first to know.

.png)